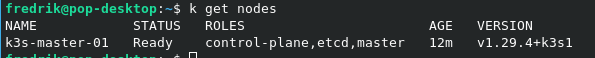

Homelab overhaul! Part two

Today I started the migration from my old, scuffed k3s cluster to a new and shiny one. I am taking it "slow and steady wins the race", as the old saying goes, since Proxmox LXC containers are not something I am very familiar with.

Following a guide from Garrett Mills, I first built a single container using Rocky Linux as the template image.

Each node got:

- 8 GB boot disk

- 4 GB of RAM

- 1 CPU core

- static IP in my new homelab VLAN

I will monitor resource usage to see whether these values need adjusting.
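If you would rather script the container than click through the web UI, the specs listed above map roughly onto a `pct create` call. This is only a sketch: the container ID, template file name, storage name (`local-lvm`), bridge (`vmbr0`), VLAN tag, hostname pattern, and addresses are placeholders I made up for illustration, and `--unprivileged 0` is my assumption that the container is privileged. The function only prints the command so it can be reviewed before it is run on the Proxmox host.

```shell
#!/bin/sh
# Print (not run) a pct create command matching the node specs.
# Every concrete value passed in here is a placeholder, not a real setting.
build_pct_create() {
    vmid="$1"; template="$2"; ip_cidr="$3"; gateway="$4"; vlan="$5"
    printf 'pct create %s %s' "$vmid" "$template"
    printf ' --hostname k3s-node-%s' "$vmid"      # hypothetical naming scheme
    printf ' --cores 1 --memory 4096'             # 1 core, 4 GB RAM (in MiB)
    printf ' --rootfs local-lvm:8'                # 8 GB disk on an assumed storage
    printf ' --net0 name=eth0,bridge=vmbr0,tag=%s,ip=%s,gw=%s' \
        "$vlan" "$ip_cidr" "$gateway"
    printf ' --unprivileged 0\n'                  # assumption: privileged container
}

# Example with documentation-range addresses and an invented template name.
build_pct_create 102 local:vztmpl/rocky-template.tar.xz 192.0.2.10/24 192.0.2.1 20
```

Printing first makes it easy to compare the flags against what the UI would have created before committing to anything.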

Pre configuration

On the Proxmox host, go to the /etc/pve/lxc directory and open the conf file named after the container's ID (for example, 102.conf), then add the following lines:

lxc.apparmor.profile: unconfined

lxc.cgroup.devices.allow: a

lxc.cap.drop:

lxc.mount.auto: "proc:rw sys:rw"
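Since I will be doing this for more than one container, here is a small sketch of how the four lines could be appended from the shell without adding duplicates on a second run. The function name `add_k3s_lxc_opts` is my own invention, and the approach assumes the container has no snapshots yet; as far as I understand, Proxmox keeps snapshot sections at the end of the conf file, so appended lines would land in the wrong place there.

```shell
#!/bin/sh
# Append the LXC overrides to a container config, skipping any line
# that is already present (exact whole-line match).
add_k3s_lxc_opts() {
    conf="$1"
    while IFS= read -r line; do
        grep -qxF -- "$line" "$conf" 2>/dev/null || printf '%s\n' "$line" >> "$conf"
    done <<'EOF'
lxc.apparmor.profile: unconfined
lxc.cgroup.devices.allow: a
lxc.cap.drop:
lxc.mount.auto: "proc:rw sys:rw"
EOF
}

# Usage on the Proxmox host (102 is the example ID used in this post):
# add_k3s_lxc_opts /etc/pve/lxc/102.conf
```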

Now we can start the container.

While still in a terminal on the Proxmox host, we must push the host's kernel boot configuration into the container:

pct push <container id> /boot/config-$(uname -r) /boot/config-$(uname -r)

Inside the container we need to make sure that /dev/kmsg exists. Kubelet uses this for some logging functions. A simple way to do it is to alias it to /dev/console. I created the file /usr/local/bin/conf-kmsg.sh with the content:

#!/bin/sh -e

if [ ! -e /dev/kmsg ]; then

ln -s /dev/console /dev/kmsg

fi

mount --make-rshared /

The script creates /dev/kmsg as a symlink to /dev/console if kmsg does not already exist, and then marks the root mount as recursively shared. To run it at boot, I created a systemd service at:

/etc/systemd/system/conf-kmsg.service

[Unit]

Description=Make sure /dev/kmsg exists

[Service]

Type=simple

RemainAfterExit=yes

ExecStart=/usr/local/bin/conf-kmsg.sh

TimeoutStartSec=0

[Install]

WantedBy=default.target

Then I made the script executable and enabled the service with:

chmod +x /usr/local/bin/conf-kmsg.sh

systemctl daemon-reload

systemctl enable --now conf-kmsg
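Before moving on, it can be worth checking that the script actually did its job. The sketch below is my own addition, not something from the guide: `describe_node` is a hypothetical helper that reports whether a path is a symlink, a regular node, or missing, and the `systemctl` and `findmnt` calls are guarded so the snippet does not fail on a machine where they are unavailable.

```shell
#!/bin/sh
# Report whether a path is a symlink (and to what), exists, or is missing.
describe_node() {
    path="$1"
    if [ -L "$path" ]; then
        printf '%s -> %s\n' "$path" "$(readlink "$path")"
    elif [ -e "$path" ]; then
        printf '%s exists\n' "$path"
    else
        printf '%s missing\n' "$path"
    fi
}

describe_node /dev/kmsg

# These two checks are only meaningful inside the container.
if command -v systemctl >/dev/null 2>&1; then
    systemctl is-enabled conf-kmsg.service 2>/dev/null || true
fi
if command -v findmnt >/dev/null 2>&1; then
    # Prints the propagation mode of the root mount.
    findmnt -no PROPAGATION / 2>/dev/null || true
fi
```

Running it once after a reboot of the container is a quick way to confirm the service survives a restart before the container is turned into a template.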

The very last preconfiguration step is to install nfs-utils so that our pods can mount NFS shares:

dnf install nfs-utils

Now we can shut down the container, right-click it, and choose "Convert to template".

Installing k3s

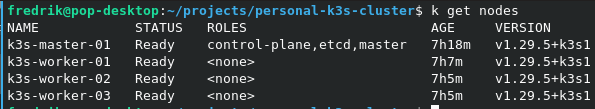

I want a high-availability cluster with 3 master nodes, so I left the previous guide behind and turned to the k3s docs for this part.

The first master node is created by right-clicking my template and cloning it. I chose a linked clone, and it has worked well so far. Once it had started, I ran the command:

curl -sfL https://get.k3s.io | K3S_TOKEN=SECRET sh -s - server --cluster-init

Once that completed, I copied the kubeconfig from /etc/rancher/k3s/k3s.yaml to my local machine and changed the server IP in it, so I could connect with kubectl from my own computer.
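The kubeconfig that k3s writes points at https://127.0.0.1:6443, which is why the IP has to be changed on the local copy. A minimal sketch of that edit, assuming the default port and using a documentation-range address as a stand-in for the real node IP (the `kubeconfig_for` name and the output file name are made up):

```shell
#!/bin/sh
# Rewrite the loopback API address in a k3s kubeconfig and print the result.
kubeconfig_for() {
    src="$1"; server_ip="$2"
    sed "s#https://127\.0\.0\.1:6443#https://${server_ip}:6443#" "$src"
}

# Usage (192.0.2.10 is a placeholder for the first master's address):
# scp root@192.0.2.10:/etc/rancher/k3s/k3s.yaml ./k3s.yaml
# kubeconfig_for ./k3s.yaml 192.0.2.10 > ~/.kube/homelab.yaml
# KUBECONFIG=~/.kube/homelab.yaml kubectl get nodes
```

Writing to a separate file instead of overwriting ~/.kube/config keeps any existing cluster contexts untouched.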

I then created 3 worker nodes and joined them to the cluster as agents with the command:

curl -sfL https://get.k3s.io | K3S_TOKEN=SECRET sh -s - agent --server https://<ip or hostname of server1>:6443

And we now have a cluster! It is not highly available yet, but once I have migrated my services off the old cluster I will have some free hardware that will get Proxmox as well, so I can spread the master nodes across separate hardware.
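To keep an eye on how far the cluster is from real high availability, the node list can be boiled down to two numbers: how many control-plane nodes exist, and how many of them could fail while etcd keeps quorum, which is floor((n - 1) / 2) for n members. The helper names below are hypothetical, and the sketch assumes the ROLES column is the third field of `kubectl get nodes --no-headers`, as it is with the default output.

```shell
#!/bin/sh
# Count control-plane nodes from `kubectl get nodes --no-headers` on stdin.
count_control_plane() {
    awk '$3 ~ /control-plane/ { n++ } END { print n + 0 }'
}

# An etcd cluster of n members tolerates floor((n - 1) / 2) failures.
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

# Usage:
# servers=$(kubectl get nodes --no-headers | count_control_plane)
# echo "servers=$servers tolerated_failures=$(tolerated_failures "$servers")"
```

With a single master the second number is 0, which is exactly the "not high availability yet" situation; with 3 masters it becomes 1.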

The cluster is now ready to use, and in my next post I will start migrating services! Stay tuned for more updates.
