K8s native sidecar with VPN

Yesterday I stumbled upon the Gluetun Docker image. It is mainly meant for use in Docker, but people have used it as a sidecar in Kubernetes. What I have not seen is it being used with the native sidecars that were introduced in v1.28 (alpha there, enabled by default since v1.29).

As all containers inside a pod share the same network namespace, a sidecar that connects to a VPN will channel all of the pod's traffic through the tunnel. With the new native sidecars we can start it and wait for the connection before starting the main container. In the shutdown phase the main container shuts down before the sidecar, which keeps the tunnel up for the main container's entire lifetime.

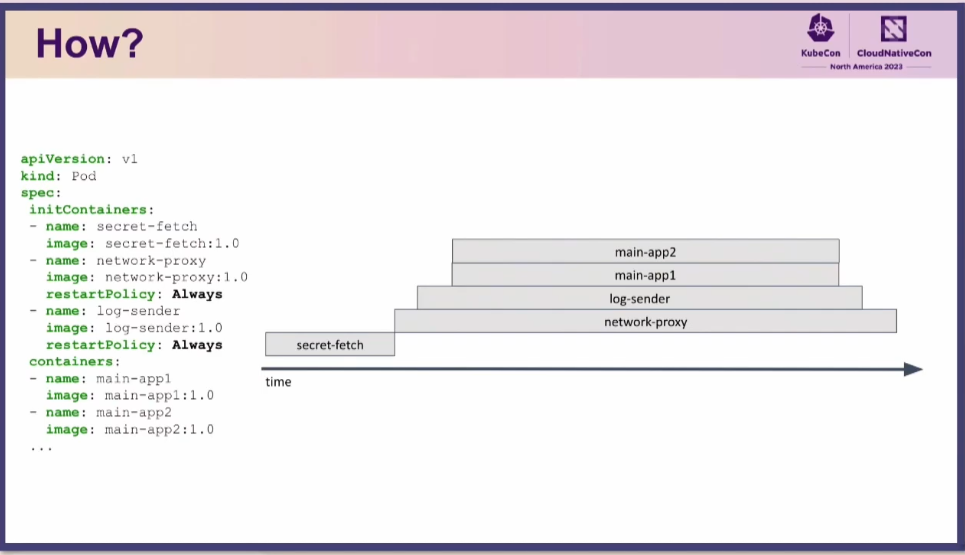

Native sidecars are defined as an initContainer, but with restartPolicy: Always.

A simple gluetun sidecar could look something like this:

    initContainers:
      - name: gluetun
        image: qmcgaw/gluetun:v3.36.0
        restartPolicy: Always
        securityContext:
          capabilities:
            add:
              - NET_ADMIN
        env:
          - name: VPN_SERVICE_PROVIDER
            value: windscribe
          - name: VPN_TYPE
            value: openvpn
          - name: OPENVPN_USER
            value: your_username
          - name: OPENVPN_PASSWORD
            value: your_password
          # Kubernetes specifics
          - name: DNS_KEEP_NAMESERVER
            value: "on"
          - name: FIREWALL_OUTBOUND_SUBNETS
            value: "10.42.0.0/15"

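DNS_KEEP_NAMESERVER is needed to keep using kube-dns.

FIREWALL_OUTBOUND_SUBNETS is set to 10.42.0.0/15, which covers both 10.42.0.0/16 and 10.43.0.0/16. Those are, for example, the default pod and service CIDRs in k3s; adjust the value to match your own cluster.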
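If you want to convince yourself that a /15 really covers both /16 ranges (or check a different range for your own cluster), a little integer arithmetic is enough. This is only a minimal sketch in POSIX shell; the helper names `ip_to_int` and `in_cidr` are made up for this example and are not part of Gluetun or Kubernetes.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address (e.g. 10.42.0.0) to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit 0) if address $1 lies inside CIDR block $2 (e.g. 10.42.0.0/15).
in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")      # part before the slash
    bits=${2#*/}                    # prefix length after the slash
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Illustrative addresses only: one from each /16, and one just outside.
for ip in 10.42.3.7 10.43.0.10 10.44.0.1; do
    if in_cidr "$ip" 10.42.0.0/15; then
        echo "$ip is inside 10.42.0.0/15"
    else
        echo "$ip is outside 10.42.0.0/15"
    fi
done
```

The first two addresses fall inside the block and the third does not, because a /15 mask ignores the lowest bit of the second octet, and 42 and 43 differ only in that bit.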

Now, this sidecar container will provide you with a VPN connection from the pod, while also keeping the Kubernetes IPs and DNS available. If your application does not need to contact other services in your cluster, you can skip DNS_KEEP_NAMESERVER. Keep in mind that using Kubernetes DNS might result in a DNS leak! And if you prefer WireGuard, the container image supports that as well.

This alone does not wait for the VPN to connect before starting the main container, but we can solve that easily with another init container. Init containers are started in the order they are listed, so it is important that the VPN container comes before the one we are adding now in your .yaml file.

      - name: ping
        image: busybox
        command:
          - sh
          - -c
          - |
            sleep 30
            while ! ping -c 1 8.8.8.8; do
              echo "Ping failed, retrying in 5"
              sleep 5
            done
            echo "ping successful, exiting"
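The loop above retries forever, so a VPN that never comes up leaves the pod stuck in its init phase. One possible variation is to cap the number of attempts so the init container exits with an error instead. This is a sketch, not something I ran in the experiment; `wait_for` is a made-up helper name, and the attempt count and delay are arbitrary.

```shell
#!/bin/sh
# wait_for MAX_TRIES DELAY COMMAND...
# Runs COMMAND until it succeeds, sleeping DELAY seconds between attempts.
# Returns 0 on success, or 1 once MAX_TRIES attempts have all failed.
wait_for() {
    max_tries=$1
    delay=$2
    shift 2
    try=1
    while ! "$@"; do
        if [ "$try" -ge "$max_tries" ]; then
            echo "giving up after $try attempts" >&2
            return 1
        fi
        echo "attempt $try failed, retrying in ${delay}s"
        try=$((try + 1))
        sleep "$delay"
    done
    echo "succeeded after $try attempt(s)"
}
```

Inside the init container script you would call it as `wait_for 60 5 ping -c 1 8.8.8.8` as the last command, so its exit status becomes the container's. With the pod's restartPolicy set to Never, a failing init container marks the whole pod as failed, which is easier to spot than a pod that sits in Init forever.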

With that, your main container will not start until this init container can reach the IP you have set for it to ping. The main container I used for this experiment was a simple curl pod that checks its public IP. It never once retrieved my actual public IP, and the connection was shuffled on each restart. All in all I am super happy with how this works, and it has simplified one of my pods drastically.
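If you would rather not eyeball the logs, the "never my real IP" check can be scripted. A small sketch, assuming the log contains one IP per line as printed by curl; `leaked_ip` is a made-up helper name, and all addresses below are documentation placeholders, not real ones.

```shell
#!/bin/sh
# leaked_ip REAL_IP
# Reads log lines on stdin and succeeds (exit 0) if any line is exactly REAL_IP.
# -F treats the dots literally, -x requires a whole-line match, -q keeps it quiet.
leaked_ip() {
    grep -Fxq -- "$1"
}

# Made-up log lines; 203.0.113.7 stands in for your real public IP.
if printf '%s\n' 198.51.100.23 198.51.100.40 | leaked_ip 203.0.113.7; then
    echo "LEAK: real IP seen in the log"
else
    echo "no leak: real IP never appeared"
fi
```

Against a running pod this would be something like `kubectl logs curlpod -c curlpod | leaked_ip <your real IP>`, using the pod and container names from the test file.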

Entire file I used for testing:

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        run: curlpod
      name: curlpod
    spec:
      initContainers:
        - name: vpn
          image: qmcgaw/gluetun:v3.36.0
          restartPolicy: Always
          securityContext:
            capabilities:
              add:
                - NET_ADMIN
          env:
            - name: VPN_SERVICE_PROVIDER
              value: windscribe
            - name: VPN_TYPE
              value: openvpn
            - name: OPENVPN_USER
              value: your_user
            - name: OPENVPN_PASSWORD
              value: your_password
            - name: DNS_KEEP_NAMESERVER
              value: "on"
            - name: FIREWALL_OUTBOUND_SUBNETS
              value: "10.42.0.0/15"
        - name: ping
          image: busybox
          command:
            - sh
            - -c
            - |
              while ! ping -c 1 8.8.8.8; do
                echo "Ping failed, retrying in 5"
                sleep 5
              done
              echo "ping successful, exiting"
      containers:
        - args:
            - /bin/sh
            - -c
            - while true; do curl ifconfig.co; sleep 15; done
          image: curlimages/curl
          imagePullPolicy: Always
          name: curlpod
      restartPolicy: Never

Sources:

- CNCF YouTube video from KubeCon 2023
- Official Kubernetes blog
